What is the use of SQL Server database snapshot and how to create SQL Server database snapshot?

Database snapshots are available only in SQL Server 2005 Enterprise Edition and later versions. All recovery models support database snapshots.

A database snapshot is a read-only, static view of the source database.Multiple snapshots can exist on a source database and always reside on the same server instance as the database. Each database snapshot is transactionally consistent with the source database as of the moment of the snapshot’s creation.A snapshot persists until it is explicitly dropped by the database owner.

Snapshots can be used for reporting purposes.In the event of a user error on a source database, you can revert the source database to the state it was in when the snapshot was created. Data loss is confined to updates to the database since the snapshot’s creation.

Creating a series of snapshots over time captures sequential snapshots of the source database. Each snapshot exist until it is dropped.Each snapshot will continue to grow as pages in original database are updated, you may want to conserve disk space by deleting an older snapshot after creating a new snapshot.

Steps to create database snapshot in SQL Server:

1.Create a new database or use existing database to create the database snapshots.

Syntax:

USE master

GO

— Create database

CREATE DATABASE test

GO

USE test

GO

2.Create table and Insert values into table.

Syntax:

–create a Table

CREATE TABLE test1 (a INT, b INT,c INT)

—Insert values

declare @a nchar(10)

set @a=1

while (@a<5)

begin

insert into test1 values (@a,’1′,’1′)

set @a=@a+1

end

3.Create a snapshot for a source database.

Syntax:

— Create Snapshot Database

CREATE DATABASE Snapshottest ON

(Name =’test’,

FileName=’C:\Program Files\Microsoft SQL Server\MSSQL11.MSSQLSERVER\MSSQL\DATA\testsnap.ss’)

AS SNAPSHOT OF test;

GO

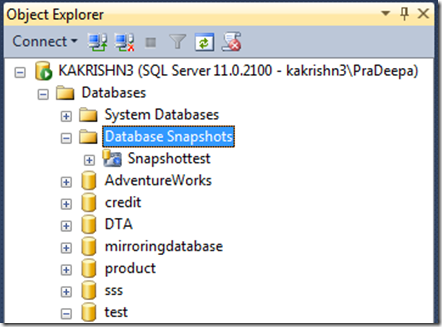

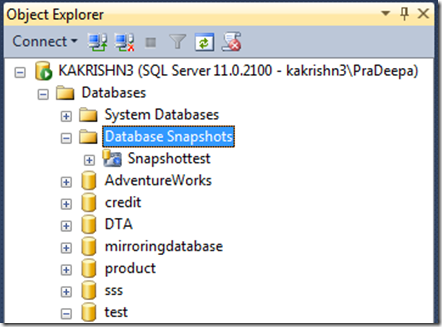

Snapshottest database is created successfully.

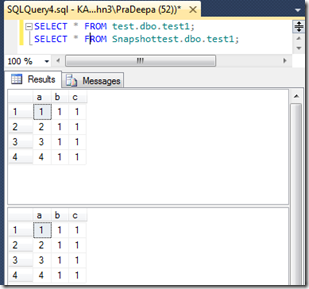

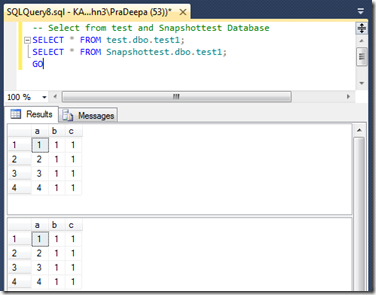

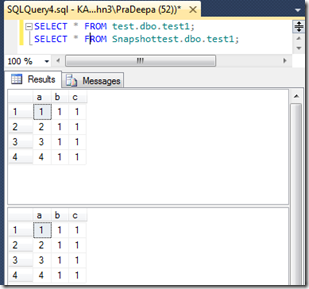

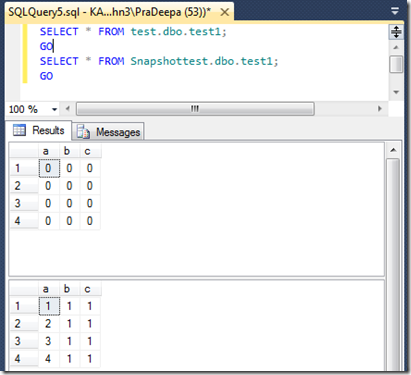

4.Now let us view both the source database and snapshot database.

Syntax:

SELECT * FROM test.dbo.test1;

Go

SELECT * FROM Snapshottest.dbo.test1;

Go

Data’s in tables of source and snapshot database are same.

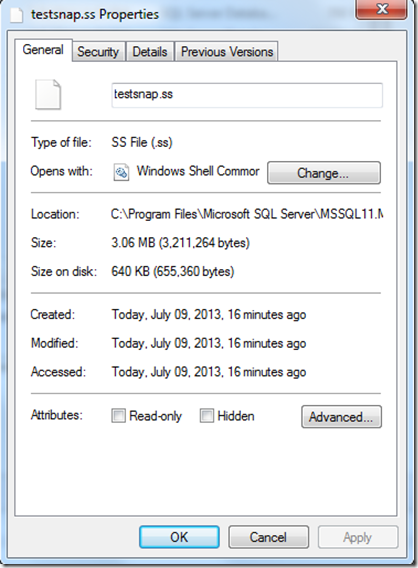

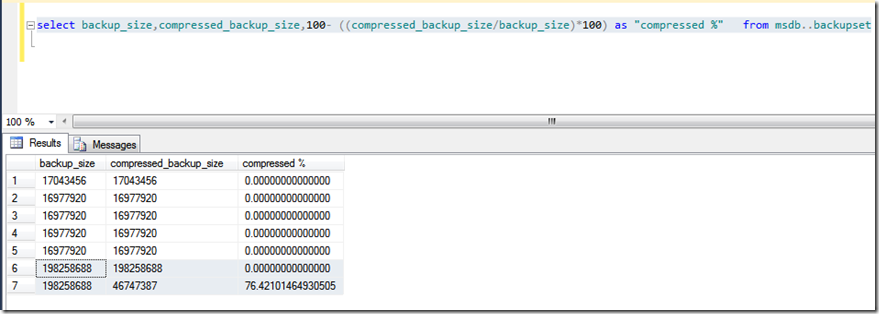

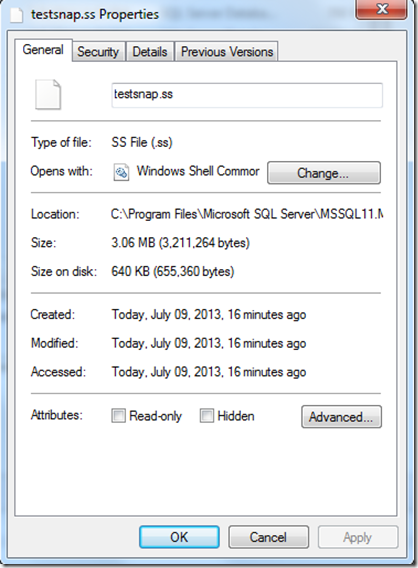

Size on disk of the testsnap .ss could be viewed by opening its properties window.Let us note the size on disk of the source database.

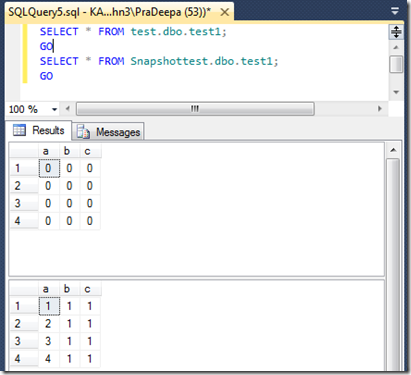

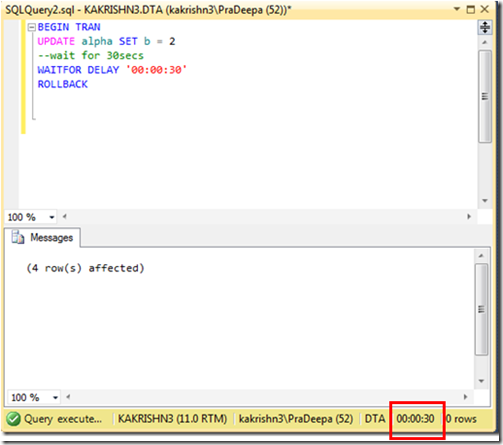

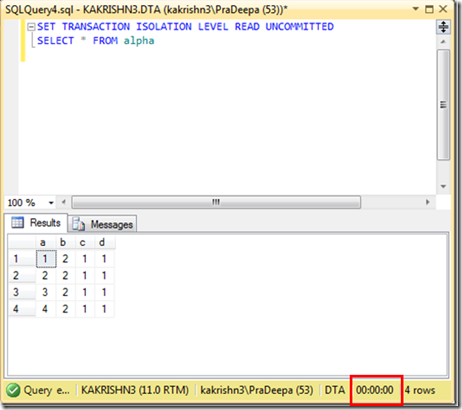

5.Let us update existing rows in the table.

Syntax:

update test1 set a=0,b=0,c=0

6.After updating rows ,let us view the source and snapshot database.The values are updated only in source database,not in snapshot database.Because snapshot database is consistent with the source database as of the moment of the snapshot’s creation.

Syntax:

— Select from test and Snapshottest Database

SELECT * FROM test.dbo.test1;

SELECT * FROM Snapshottest.dbo.test1;

GO

But size on disk is increased,It clearly shows that when we update data in Source database, it copies the old/existing data pages to Snapshot database. This is the reason why the size of the snapshot database is increasing while updating the source database.

7.We could revert the source database.Reverting overwrites updates made to the source database since the snapshot was created by copying the pages from the sparse files back into the source database.

Syntax:

— Restore old data from Snapshot Database

USE master

GO

RESTORE DATABASE test

FROM DATABASE_SNAPSHOT = ‘Snapshottest’;

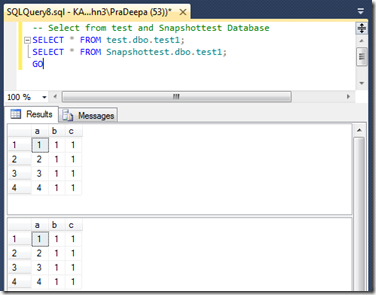

8.View the data in the tables from the source and the snapshot database after the restore from snapshot.

Syntax:

SELECT * FROM test.dbo.test1;

Go

SELECT * FROM Snapshottest.dbo.test1;

Go

9.We drop the snapshot database like any other database using drop database command.

Syntax:

— drop snapshottest

DROP DATABASE [snapshottest];

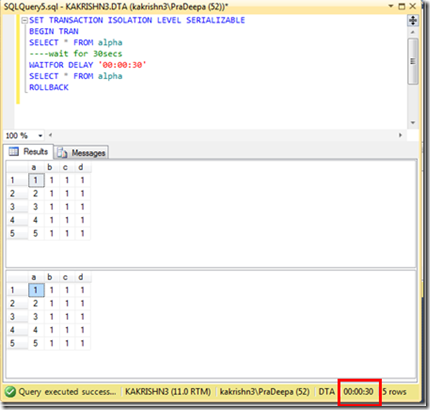

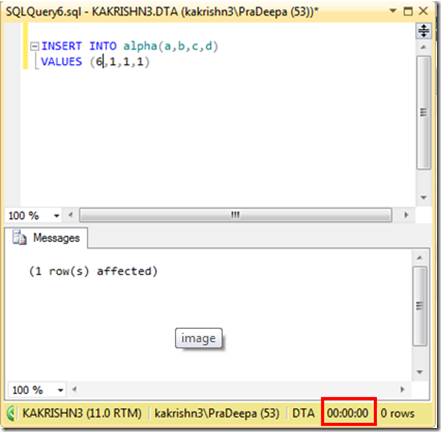

10. We can also create database snapshot on mirror database for load balancing.

![clip_image001[8] clip_image001[8]](https://sqlserverscribbles.files.wordpress.com/2013/07/clip_image0018_thumb4.png?w=631&h=524)